October 2021 Update

Tech Against Terrorism Updates

- We are hiring! We are looking for a PR Manager to help communicate our work to key stakeholders. For more details and how to apply, see here.

Publications

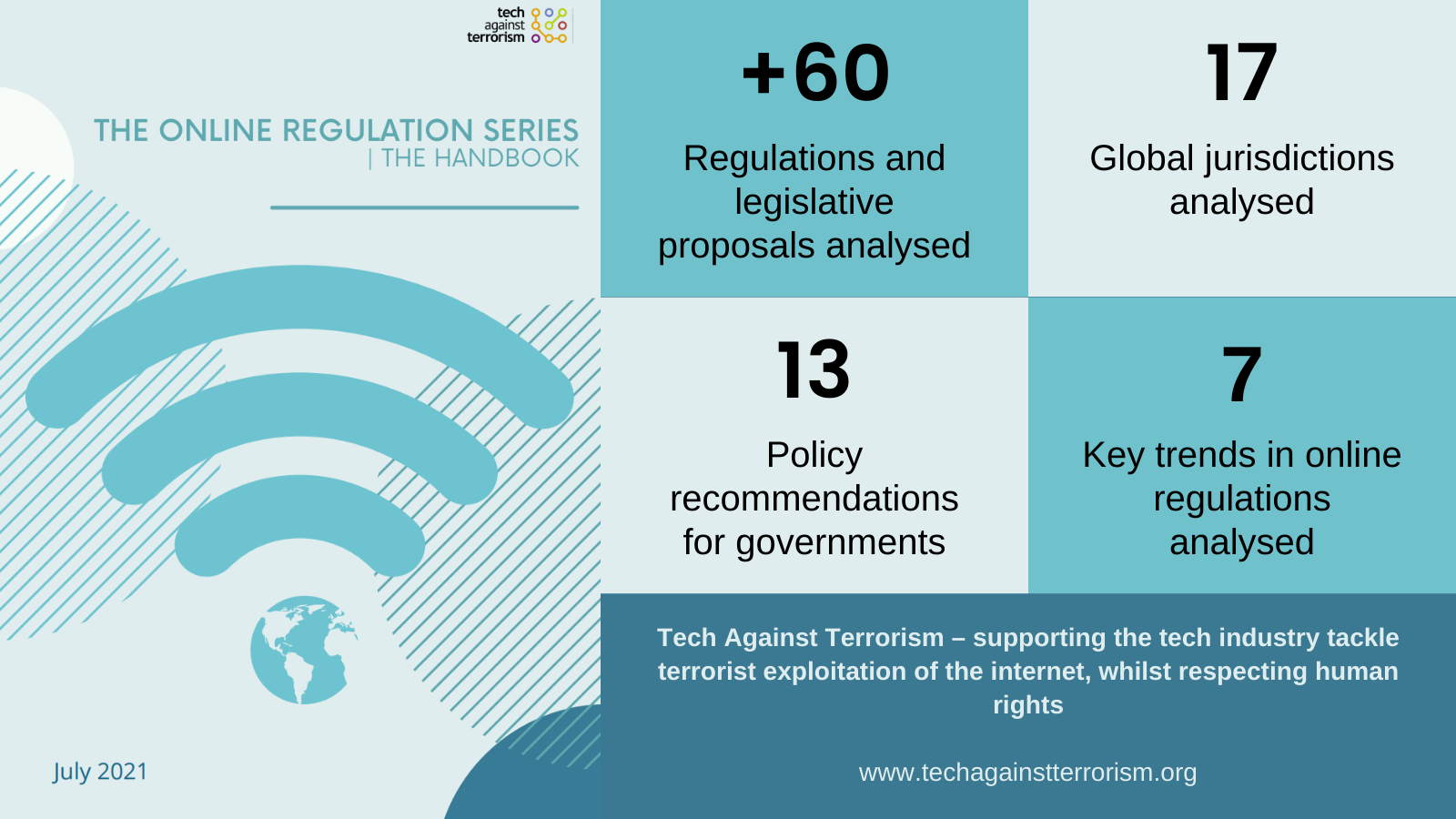

- Our Head of Policy and Research, Jacob Berntsson, and Senior Research Analyst, Maygane Janin, wrote an article for Lawfare, discussing global online regulation of terrorist and harmful content and what policymakers should keep in mind when designing legislation. If you would like to read more on this, see our Online Regulation Series Handbook.

- We submitted a response to the consultation on the Criminal Code Amendment (Sharing of Abhorrent Violent Material) Act 2019 (AVM Act) passed in Australia in 2019.

Webinars

- Thank you for joining our webinar last week on “Online terrorist financing: assessing the risks and mitigation strategies”. If you were unable to attend and would like access to a recording of this webinar, please get in touch with us at contact@techagainstterrorism.org

- Agenda:

- Florence Keen, Research Fellow at the International Center for the Study of Radicalisation, and PhD Candidate in War Studies at King’s College London

- Audrey Alexander, Researcher and Instructor, West Point’s Combating Terrorism Center

- Julie Lascar, Public Policy Manager at Facebook

- Moderators: Maygane Janin, Senior Research Analyst, Tech Against Terrorism; Erin Saltman, Director of Programming, GIFCT

The Terrorist Content Analytics Platform

- Thanks to all who tuned in to our TCAP November Office hours. If you were unable to attend this month and would like access to a recording of the session, please get in touch with us at support@terrorismanalytics.org. You can also request a copy of past Office Hour recordings on our website here.

- During October we developed our hashing capabilities. All content submitted to the TCAP is now passed through our hashing function to produce a distinct digital algebraic record of the content without storing any identifiable information about the original content.

- We have just published our monthly TCAP Newsletter with all our latest updates. The newsletter can be found here.
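For readers curious about what hashing submitted content can look like in practice, here is a minimal sketch. It is illustrative only: the newsletter does not say which hash function the TCAP uses, so SHA-256 from Python's standard library is an assumption, and the names `hash_content`, `register`, and `seen_hashes` are hypothetical.

```python
import hashlib

# Hypothetical in-memory store: only digests are kept, never the content itself.
seen_hashes: set[str] = set()


def hash_content(data: bytes) -> str:
    """Return the hex SHA-256 digest of the raw content bytes (assumed algorithm)."""
    return hashlib.sha256(data).hexdigest()


def register(data: bytes) -> bool:
    """Record the digest of the content; return True if it was not seen before."""
    digest = hash_content(data)
    is_new = digest not in seen_hashes
    seen_hashes.add(digest)
    return is_new
```

A cryptographic hash such as this one only matches byte-identical copies; detecting edited or re-encoded copies would need a different technique, such as perceptual hashing.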

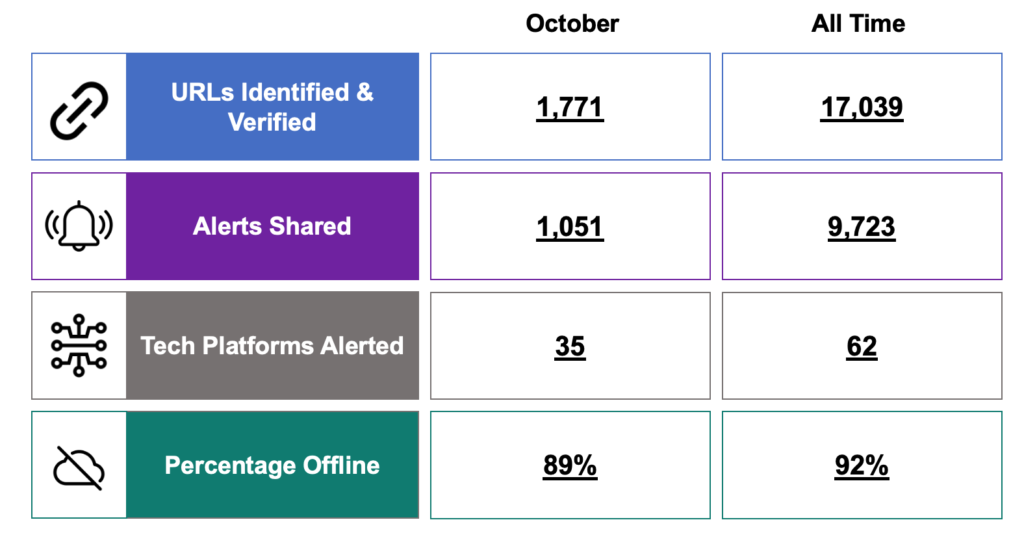

- As of October, the TCAP statistics are:

Speaking Events

- Tech Against Terrorism collaborated with the Commonwealth on delivering a series of inter-governmental workshops for policy makers in Sub-Saharan Africa.

- Our Senior Product Manager, Sophie Laitt, presented at a Safety Tech Innovation Network event on how to protect online systems against illegal content, highlighting the work of Tech Against Terrorism and the Terrorist Content Analytics Platform (TCAP).

Media Coverage

- Our founder, Adam Hadley, was recently quoted in a Daily Beast article about content moderation on small tech platforms, with a particular focus on the difficulties of moderating far-right violent extremist content. Please see the article here.

- Our Senior Research Analyst, Maygane Janin, spoke about the challenges tech companies face when moderating terrorist content and discussed the use of content moderation avoidance techniques by terrorists and violent extremists. Read the article here.

What's up next?

- We are very excited to announce that we will be opening up parts of our Knowledge Sharing Platform (KSP) for public view. The spotlight resources will focus on Online Regulation. Keep an eye out for more updates on this and when the website will go live.

- On Tuesday, 23 November, we will be hosting our next webinar in partnership with the GIFCT, on “Countering terrorist use of the internet, moderating online content and safeguarding human rights”. You can register for the event here. Please stay tuned for upcoming agenda details.

- To commemorate the one-year anniversary of the TCAP, we will be publishing our first annual TCAP Transparency Report, outlining the implementation and yearly statistics of the TCAP. Stay tuned for this in the coming month.

Tech Against Terrorism Reader's Digest – 5 November

Our weekly review of articles on terrorist and violent extremist use of the internet, counterterrorism, digital rights, and tech policy.

Top Stories

- This year marks the 20th anniversary of the adoption of United Nations resolution 1373 (2001) and the establishment of the UN Counter-Terrorism Committee. As part of the anniversary, the UN Security Council has published 20 committee achievements from the past 20 years.

- Facebook has stated that it has shut down a troll farm run by the Nicaraguan government which was supposedly posting anti-opposition content and misinformation. 937 accounts, 140 pages, and 24 groups were closed within the last month as part of the operation.

- Microsoft has acquired Two Hat, a content moderation provider, with the aim of using Artificial Intelligence to moderate content on Xbox. The acquisition is designed to improve Microsoft’s content moderation in gaming and consumer services.

- Facebook is shutting down its facial recognition software (“faceprints”) on the platform, citing security concerns and stating that there need to be stronger privacy and transparency controls for users in the future.

- Facebook has given access to its content reporting system to the Kazakhstan government, in order to strengthen relationships between Facebook’s new parent company, Meta, and government bodies.

- The European Commission, Parliament, and Council have started to negotiate the final version of Europol’s new mandate, which seeks to increase the agency’s cooperation with private parties and its ability to process large personal data sets to assist with investigations. The mandate could be ready for December, according to Slovenia’s interior minister Aleš Hojs.

- Brett Solomon of Access Now addressed the UN Security Council about online regulation and countering hate speech online. He provided key recommendations to states and tech companies to better implement changes to reduce the volume of hate speech whilst ensuring that democratic rights of individuals are upheld.

Tech Policy

- Trolls will be jailed for ‘psychological harm’: Matt Dathan, Home Affairs Editor at The Times, explains how the UK’s Online Safety Bill will seek to prosecute those who post and share content online that is likely to cause psychological harm. This development of the Draft Online Safety Bill shows a shift in focus away from the type of content and towards its effects and impact. The new section of the bill would allow imprisonment for up to two years if a message is deemed likely to have caused psychological harm. However, the threshold for what is considered “likely to cause psychological harm” is yet to be detailed in the bill. (Dathan, The Times, 04.11.2021)

To read more about the creation and development of the Online Safety Bill and Tech Against Terrorism’s statement on the bill, see here.

Our Online Regulation Series Handbook includes an entry dedicated to the regulatory framework in the UK (pp. 107-116).

To read more about our consultation on the bill, see here.

- So, What Does Facebook Take Down? The Secret List of ‘Dangerous’ Individuals and Organizations: The Intercept published a full list of the people and groups that Facebook deems Dangerous Individuals and Organizations (DIO). The platform uses this list in content moderation to remove content produced by listed entities, whether through official or supporter channels. Faiza Patel and Mary Pat Dwyer analyse the published list and find that it is dominated by Islamic violent extremists, whereas the growing threat of far-right violent extremists is largely underrepresented. The list (which is supposedly guided by the United States' formal designation of terrorist entities) does not mention some designated groups, but does mention various undesignated groups and individuals. This leaves a significant gap in knowledge as to what guides the process of inclusion in the list. The article highlights that content published by groups designated in the US is not necessarily illegal under US law. Patel and Dwyer conclude that content moderation requires companies to balance various competing interests and that any approach will likely draw both supporters and critics. To have an honest dialogue about these trade-offs and about who bears the brunt of its content moderation decisions, the article states that Facebook needs to set aside the "distracting fiction" that US law requires its current approach. (Patel and Dwyer, Just Security, 01.11.2021)

To read more about online regulation and content moderation on platforms, see our Online Regulation Series.

For any questions or media requests, please get in touch via:

contact@techagainstterrorism.org
