Our weekly review of articles on terrorist and violent extremist use of the internet, counterterrorism, digital rights, and tech policy.

Webinar Alert

- Thank you to everyone who tuned in for the first 2021 webinar of the TAT & GIFCT E-learning Webinar Series, “The Nuts and Bolts of Counter Narratives: What works and why?” If you were unable to attend and would like to access a recording, please get in touch with us at contact@techagainstterrorism.org.

Agenda:

- Sara Zeiger, Program Manager Research and Analysis, Hedayah

- Munir Zamir, PhD Candidate at the University of South Wales

- Tarek Elgawhary, Founder, Making Sense of Islam; CEO, Coexist Research International

- Ross Frenett, Founder & CEO, Moonshot CVE

- Moderators: Erin Saltman, Director of Programming, GIFCT; Anne Craanen, Research Analyst, Tech Against Terrorism

- Our next TAT & GIFCT E-learning Webinar Series, “APAC in Focus: Regional Responses to Terrorist and Violent Extremist Activity Online”, will take place on Thursday, 24 June, 3pm BST. Stay tuned for the agenda announcement!

Tech Against Terrorism Updates

- Our Research Analyst and Policy Lead of the Terrorism Content Analytics Platform (TCAP), Anne Craanen, presented at the Researcher Ethics and Safety panel at GNET’s first annual conference. She spoke about the ethical and security principles that guide the development of the TCAP and the wider work of Tech Against Terrorism. GNET will release the recording next week.

- The end of May marks the 6-month anniversary of the TCAP alerts. Next week, through our office hours, our Twitter, and our Newsletter, we will publish the TCAP statistics for the first 6 months, provide an overview of the latest TCAP policies, and announce what's next for the development of the platform. Don’t miss out on the TCAP 6-month anniversary: register for our next TCAP office hours, follow us on Twitter, and sign up to our Newsletter.

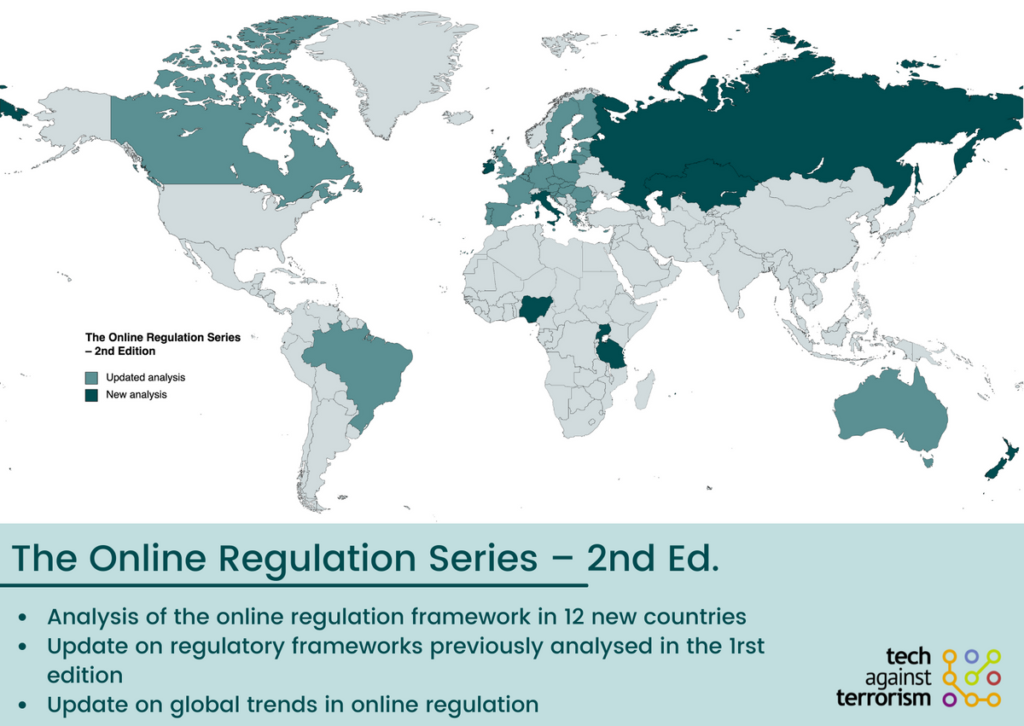

We are excited to announce that an updated version of the Knowledge Sharing Platform (KSP) will soon be re-launched to tech platforms. The KSP is a collection of interactive tools and resources designed to support the operational needs of smaller tech platforms: a free “one-stop shop” where companies can access practical resources to support their counterterrorism and transparency efforts. It contains research and guidelines on topics including policies and content standards, terrorist and violent extremist use of the internet, proscribed groups, online regulation, and transparency reporting. Stay tuned for further announcements about the launch date!

Top Stories

- This week, the Indian police visited a Twitter office in Delhi to serve notice after the company labelled a tweet by Sambit Patra, a spokesperson for the ruling Bharatiya Janata Party (BJP), as "manipulated media".

- WhatsApp filed a legal complaint contesting the Indian government's 2021 Guidelines for Intermediaries and Digital Media Ethics Code Rules, specifically the requirement that messaging services identify the “first originator of information” when demanded by authorities. WhatsApp argues that this requirement violates the privacy rights protected by the Indian Constitution.

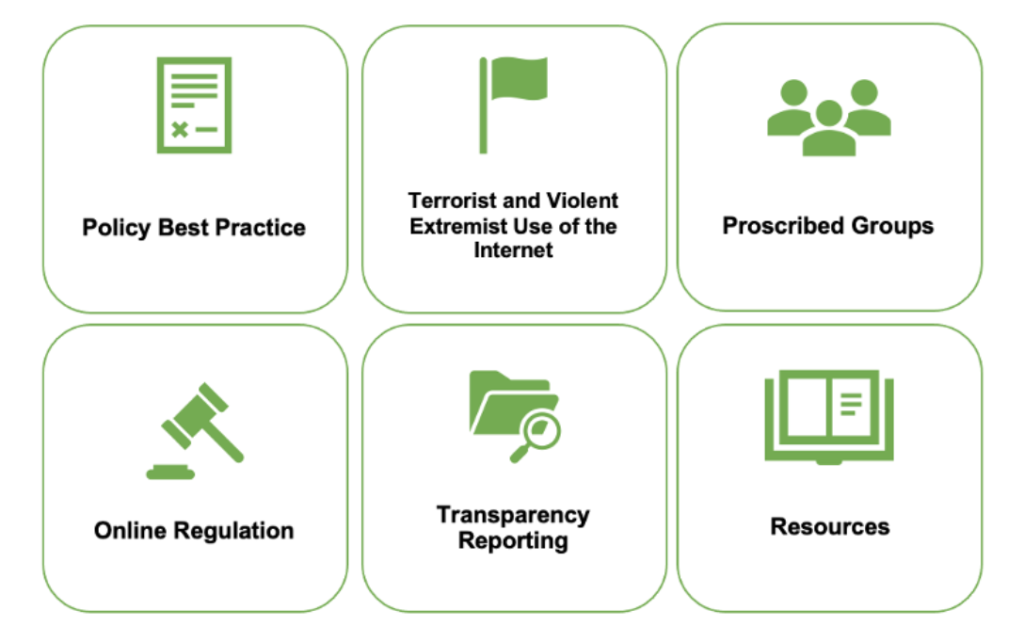

The 2021 Guidelines are based on the draft 2018 Guidelines, which also included a traceability requirement. We analysed the 2018 Guidelines in our 2020 Online Regulation Series blog post, which you can read here.

We have published a thread on Twitter discussing this here.

- The governor of Florida signed a law giving the state the power to penalise social media companies when they ban political candidates. The law imposes penalties of up to $250,000 a day for a social media platform that bans a candidate. Read more on this in the summaries below.

- The Facebook Oversight Board overturned Facebook’s decision to remove a comment by a supporter of Russian opposition leader Alexei Navalny which called another user a “cowardly bot.”

- Facebook has announced that it will begin rolling out harsher punishments for individual accounts that repeatedly share false or misleading posts. Under the new system, Facebook will “reduce the distribution of all posts” from people who routinely share misinformation, making it harder for others on the service to see their content.

Tech Policy

- Florida’s New Social Media Law Will Be Laughed Out of Court: Gilad Edelman discusses Florida’s new social media law, which was signed by the Governor of Florida this week. The law gives the state of Florida the power to penalise social media companies when they ban political candidates, and it is the first state law regulating how platforms moderate content on their services. According to Edelman, it is “very hard to imagine any of these provisions ever being enforced”. He argues that the “Stop Social Media Censorship Act” violates both the First Amendment to the US Constitution and Section 230 of the Communications Decency Act, which together generally shield online platforms from liability for their content moderation decisions. (Edelman, WIRED, 24.05.2021).

To read more about the First Amendment, Section 230, and online regulation in the United States, please see our 2020 Online Regulation Series blog on the United States here.

- Indonesia: Suspend, Revise New Internet Regulation: Human Rights Watch sheds light on the various issues with Ministerial Regulation 5 (MR5), which came into force in Indonesia in November 2020 with little consultation. In a recent letter to Indonesia’s Minister of Communication and Information Technology, Human Rights Watch argued that the Indonesian government should suspend and substantially revise the regulation on online content to meet international human rights standards. Among the issues Human Rights Watch identifies is the requirement that companies “ensure” their platforms do not contain or facilitate the distribution of “prohibited content”, which implies an obligation to monitor content. Failure to do so can lead to the entire platform being blocked. According to Human Rights Watch, a requirement to proactively monitor or filter content is both inconsistent with the right to privacy and likely to amount to prepublication censorship. (Human Rights Watch, 21.05.2021).

- Brussels to Big Tech: Open up your algorithms — or else: Mark Scott reports that the European Commission is expected to demand that Facebook, Google and Twitter alter their algorithms, and prove that they have done so, to “stop the spread of online falsehoods”. Scott writes that under the new rules, Brussels will also require the firms to disclose how they are responding to the spread of disinformation on their platforms and what measures they are taking to remove or demote specific content or accounts that promote falsehoods, and to provide online users with greater transparency on how they are targeted with digital ads. (Scott, POLITICO, 24.05.2021).

- This week we’re listening to the Lawfare podcast “The Arrival of International Human Rights Law in Content Moderation”. In this episode, Evelyn Douek and Quinta Jurecic invited David Kaye to discuss the increasingly important role played by International Human Rights Law in content moderation and what it really means in practice.

For any questions, please get in touch via:

contact@techagainstterrorism.org
